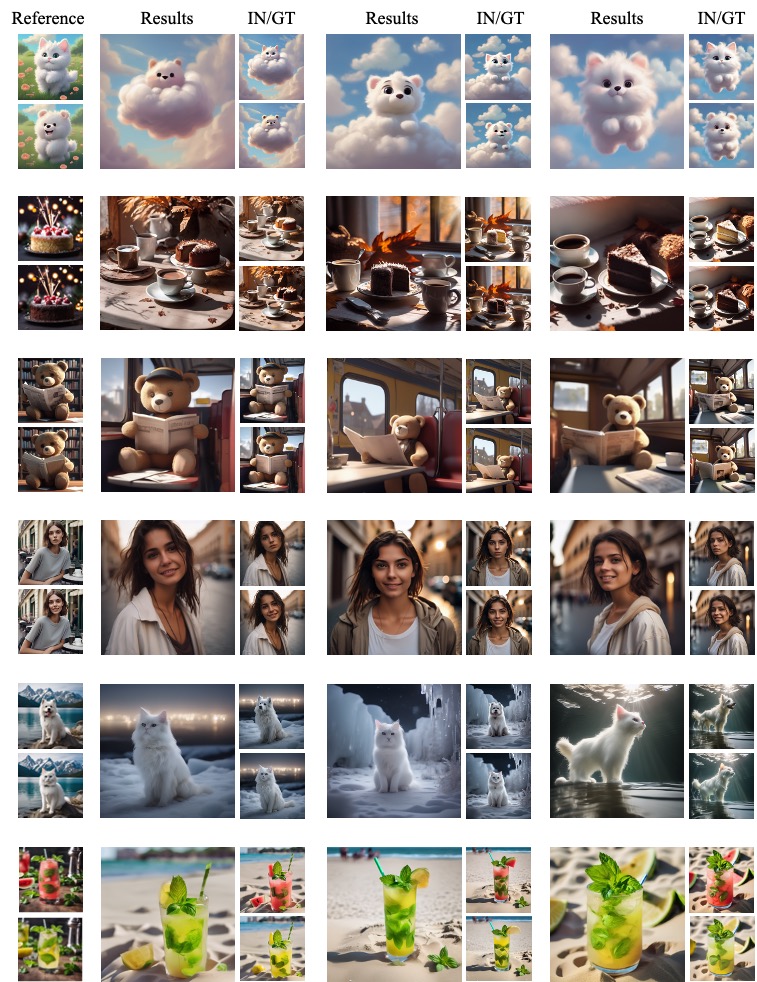

Exemplar image pairs

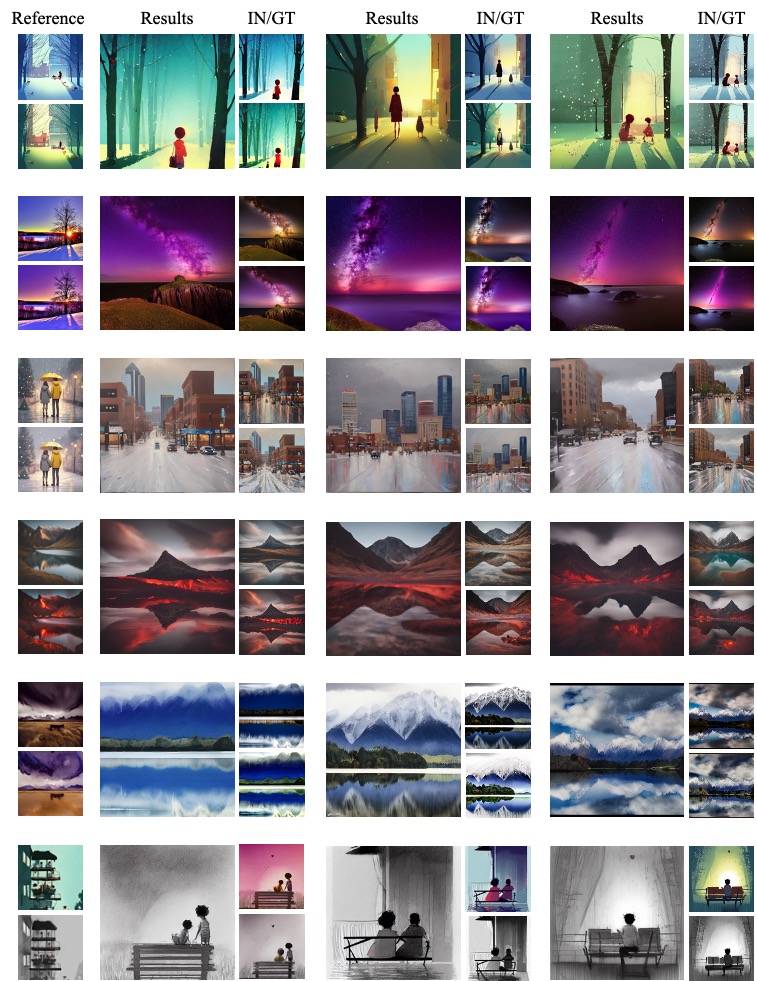

Exemplar image pairs

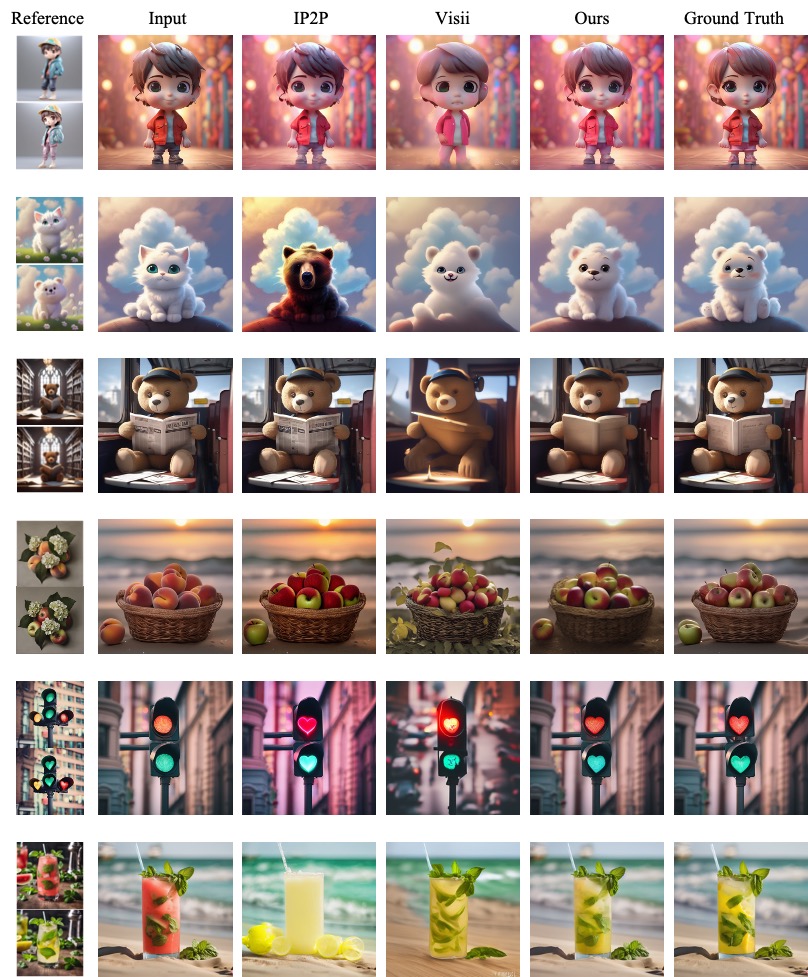

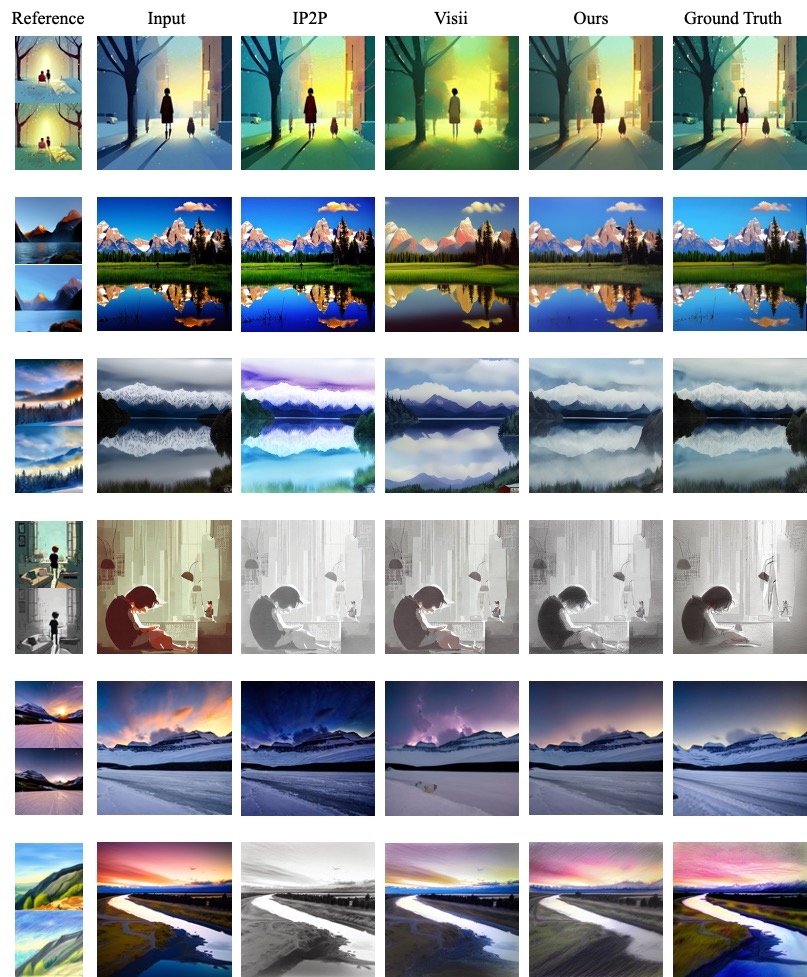

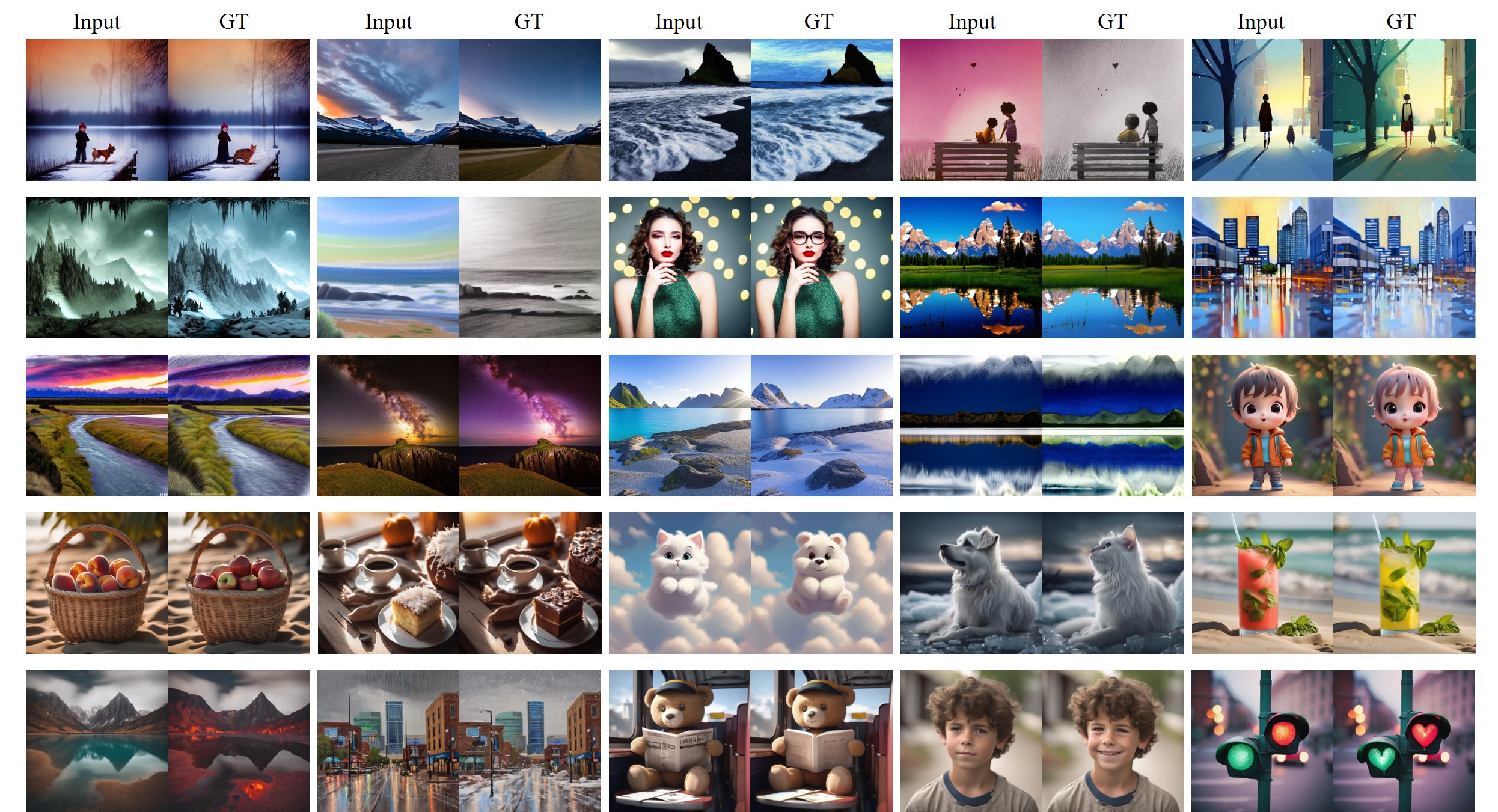

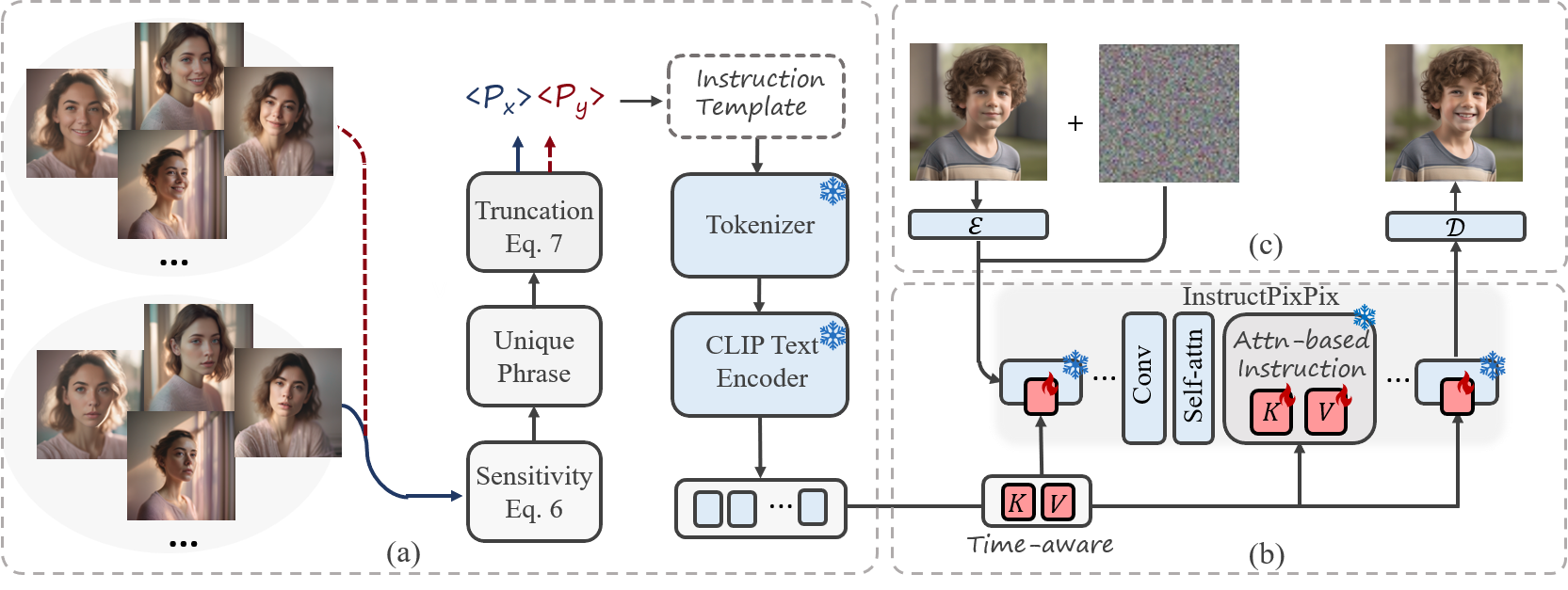

In recent years, instruction-based image editing methods have attracted significant attention. Although they encode a wide range of editing priors, these methods struggle with edits that are difficult to describe accurately in language. To bridge this gap, we propose InstructBrush, an inversion method for instruction-based image editing methods. It extracts the editing effect from exemplar image pairs as an editing instruction, which is then applied to edit new images. InstructBrush introduces two key techniques, Attention-based Instruction Optimization and Transformation-oriented Instruction Initialization, to address the limitations of the previous method in inversion quality and instruction generalization. To evaluate how well instruction inversion methods guide image editing in open scenarios, we establish the Transformation-Oriented Paired Benchmark (TOP-Bench), which covers a rich set of scenes and editing types. This benchmark paves the way for further exploration of instruction inversion. Quantitatively and qualitatively, our approach achieves superior editing performance and is more semantically consistent with the target editing effects.
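The core idea of instruction inversion, recovering an editing instruction from exemplar pairs while the editor itself stays frozen, can be illustrated with a toy sketch. This is not the InstructBrush implementation: the linear `edit` function is a stand-in for a real instruction-based editing model, images are short lists of numbers, and every name here (`edit`, `invert_instruction`, `WEIGHTS`, `TRUE_INSTRUCTION`) is hypothetical. The two techniques named above, attention-based optimization and transformation-oriented initialization, are not modeled; the sketch starts from a zero instruction and uses plain gradient descent.

```python
# Toy illustration only: a linear "editor" stands in for a frozen
# instruction-based editing model. All names are hypothetical.

def edit(image, instruction, weights):
    """Frozen toy editor: shifts each pixel by a linear function of the instruction."""
    return [
        pixel + sum(w * c for w, c in zip(row, instruction))
        for pixel, row in zip(image, weights)
    ]

def invert_instruction(pairs, weights, dim, steps=200, lr=0.1):
    """Fit an instruction vector to (before, after) pairs; the editor weights stay fixed."""
    instruction = [0.0] * dim  # assumption: zero init, not the paper's initialization
    for _ in range(steps):
        grad = [0.0] * dim
        for before, after in pairs:
            predicted = edit(before, instruction, weights)
            for row, p, t in zip(weights, predicted, after):
                residual = p - t
                for k in range(dim):
                    # Gradient of the squared error with respect to instruction[k]
                    grad[k] += 2.0 * residual * row[k]
        instruction = [c - lr * g / len(pairs) for c, g in zip(instruction, grad)]
    return instruction

# Fixed editor weights (4 "pixels", 2 instruction dimensions).
WEIGHTS = [[1.0, 0.0], [0.0, 1.0], [0.5, -0.5], [0.2, 0.3]]
# The edit we pretend cannot be described in words; used only to build exemplars.
TRUE_INSTRUCTION = [0.8, -0.4]

befores = [[0.1, 0.2, 0.3, 0.4], [0.9, 0.5, 0.0, 0.7]]
pairs = [(b, edit(b, TRUE_INSTRUCTION, WEIGHTS)) for b in befores]

# Invert the exemplar pairs into an instruction, then reuse it on a new image.
learned = invert_instruction(pairs, WEIGHTS, dim=2)
new_image = [0.3, 0.3, 0.6, 0.1]
edited = edit(new_image, learned, WEIGHTS)

print(learned)  # close to TRUE_INSTRUCTION
print(edited)
```

In this toy setting the learned vector transfers to `new_image` because the edit is fully determined by the instruction. In a real diffusion-based editor the optimization target and the place where the instruction enters the network would differ.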

@article{zhao2024instructbrush,
  title={InstructBrush: Learning Attention-based Instruction Optimization for Image Editing},
  author={Zhao, Ruoyu and Fan, Qingnan and Kou, Fei and Qin, Shuai and Gu, Hong and Wu, Wei and Xu, Pengcheng and Zhu, Mingrui and Wang, Nannan and Gao, Xinbo},
  journal={arXiv preprint arXiv:2403.18660},
  year={2024}
}